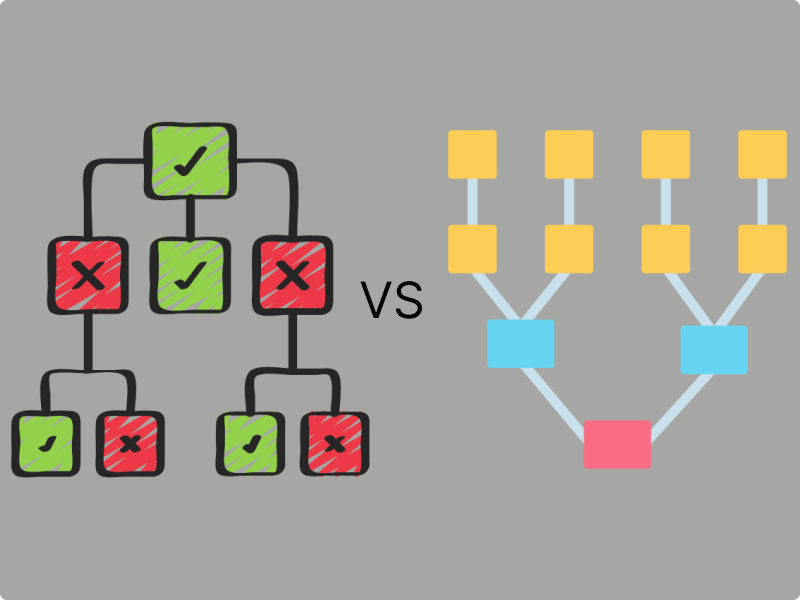

If you are new to machine learning, you may have come across the terms random forest classifier and decision trees and are now curious about these two algorithms. Decision trees and random forest classifiers are related but not the same. In this article, learn the differences between the two:

Decision Tree:

A decision tree is a hierarchical structure representing a sequence of decisions leading to a final decision or outcome. Each internal node of the tree represents a decision based on the value of a feature, and each leaf node represents the outcome (prediction).

-

Construction: Decision trees are created by repeatedly splitting the data into subsets based on the feature values. The splitting process continues until it meets the stopping criterion, such as when it reaches the maximum depth or purity of the nodes.

-

Prediction: To predict a new data point, it traverses the tree from the root node down to a leaf node based on the values of the features, and the prediction is the majority class (for classification) or the average (for regression) of the instances in the leaf node.

Random Forest Classifier:

A random forest classifier is an ensemble learning method that operates by constructing multiple decision trees during training and outputting the mode of the classes (classification) or the mean prediction (regression) of the individual trees.

-

Construction: Random Forest uses a bagging technique (bootstrap aggregating) to create multiple decision trees. Each tree is trained using a randomly selected portion of the training data, and each split in the tree is analyzed with a randomly selected set of features.

-

Prediction: To make a prediction, each tree in the forest independently predicts the class (for classification) or the value (for regression) of the new data point, and the final prediction is determined by aggregating the predictions of all trees, such as majority voting for classification or averaging for regression.

Difference:

The main difference between a decision tree and a random forest classifier lies in their construction and prediction process:

-

Single vs. Ensemble: A decision tree is a single tree structure that makes predictions based on a set of rules learned from the data. In contrast, a random forest classifier consists of multiple decision trees trained on different subsets of data and features, and the final prediction is made by aggregating the predictions of all trees.

-

Overfitting: Decision trees are prone to overfitting, especially with deep trees that capture noise in the data. Random forest classifiers mitigate overfitting by constructing multiple trees and averaging their predictions, resulting in a more robust model.

-

Bias-Variance Tradeoff: Decision trees have high variance and low bias, which can lead to overfitting. Random forest classifiers reduce variance by averaging multiple trees, leading to better generalization performance.

Conclusion

In summary, while decision trees and random forest classifiers share the same principle of using trees for prediction, random forests are an ensemble of decision trees that provide improved performance and robustness by aggregating predictions from multiple trees.

If you found this article helpful consider subscribing and sharing.

Thanks for reading.