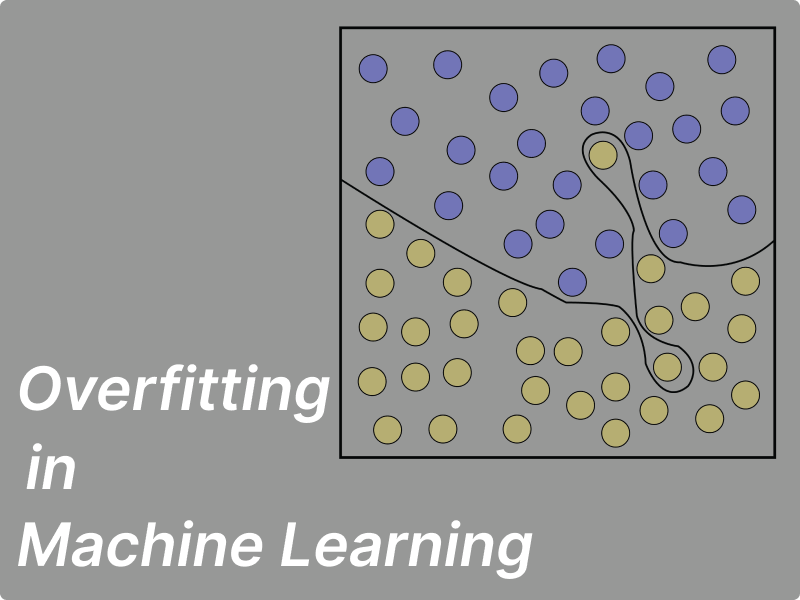

Overfitting is a common problem in machine learning and deep learning where a model learns the training data too well, to the extent that it captures noise or random fluctuations in the data as if they were meaningful patterns. In simpler terms, an overfit model learns not only the underlying patterns in the data but also the noise present in the training dataset. As a result, it performs well on the training data but fails to generalize to data it has not seen before.

What is Overfitting?

Overfitting occurs when a model becomes too complex relative to the amount and nature of the training data, essentially memorizing the training data rather than learning the underlying relationships that generalize well to new data.
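To make the idea of memorizing versus generalizing concrete, here is a minimal sketch. It assumes a synthetic dataset (a noisy sine curve) and polynomial models of increasing degree; the data, the chosen degrees, and the helper names `make_data` and `train_test_mse` are illustrative assumptions, not part of any particular library or workflow.

```python
import numpy as np
from numpy.polynomial import polynomial as P

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable

def make_data(n):
    """Noisy samples of a smooth target (a sine curve) on [-1, 1]."""
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=n)
    return x, y

x_train, y_train = make_data(15)   # small training set
x_test, y_test = make_data(200)    # held-out data from the same distribution

def train_test_mse(degree):
    """Least-squares polynomial fit; returns (train MSE, test MSE)."""
    coeffs = P.polyfit(x_train, y_train, degree)
    train_mse = np.mean((P.polyval(x_train, coeffs) - y_train) ** 2)
    test_mse = np.mean((P.polyval(x_test, coeffs) - y_test) ** 2)
    return train_mse, test_mse

# Degree 12 has 13 coefficients for only 15 training points.
results = {d: train_test_mse(d) for d in (1, 3, 12)}
for d, (tr, te) in results.items():
    print(f"degree {d:2d}: train MSE = {tr:.4f}, test MSE = {te:.4f}")
```

Training error can only go down as the degree increases, because each larger polynomial contains the smaller ones. The number to watch is the test error: when it stops following the training error downward, the extra capacity is being spent on noise.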

Where Overfitting Affects Models:

Overfitting affects the performance of models in various ways:

- Performance on Unseen Data: Overfit models tend to perform poorly on new, unseen data because they have essentially memorized the training data and cannot generalize well.

- Model Interpretability: Overly complex models can be difficult to interpret, as they may learn intricate relationships that are specific to the training data and not generalizable to new data.

- Resource Consumption: Overfit models may require more computational resources and time for training due to their complexity.

When Overfitting Occurs:

Overfitting can occur in various machine learning and deep learning scenarios:

- Complex Models: Models with a large number of parameters relative to the size of the training dataset are prone to overfitting.

- Noisy Data: Datasets with high noise or irrelevant features can exacerbate overfitting.

- Small Datasets: When the size of the training dataset is small relative to the complexity of the model, overfitting is more likely to occur.
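The interaction between model complexity and dataset size can be sketched as follows. The example keeps the model fixed (a degree-9 polynomial, an arbitrary choice) and varies only the number of training points; the synthetic sine data and the helper names `sample` and `generalization_gap` are assumptions made for illustration.

```python
import numpy as np
from numpy.polynomial import polynomial as P

rng = np.random.default_rng(1)  # fixed seed so the run is repeatable

def sample(n):
    """Noisy samples of a sine curve on [-1, 1]."""
    x = rng.uniform(-1.0, 1.0, size=n)
    y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=n)
    return x, y

x_test, y_test = sample(1000)   # large held-out set
DEGREE = 9                      # same model complexity for every run

def generalization_gap(n_train):
    """Test MSE minus train MSE for a model fit on n_train points."""
    x, y = sample(n_train)
    coeffs = P.polyfit(x, y, DEGREE)
    train_mse = np.mean((P.polyval(x, coeffs) - y) ** 2)
    test_mse = np.mean((P.polyval(x_test, coeffs) - y_test) ** 2)
    return test_mse - train_mse

gaps = {n: generalization_gap(n) for n in (12, 50, 500)}
for n, gap in gaps.items():
    print(f"n_train = {n:3d}: test MSE - train MSE = {gap:.4f}")
```

The gap between test and training error is a simple proxy for overfitting. With the same model, it should be far larger for the 12-point training set than for the 500-point one.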

Drawbacks of Overfitting:

- Poor Generalization: Overfit models fail to generalize well to new, unseen data, leading to inaccurate predictions in real-world scenarios.

- Model Instability: Overfit models may be highly sensitive to small changes in the training data, leading to unstable predictions.
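The instability point can be checked with a leave-one-out experiment: remove a single training example, refit, and see how much the predictions move. The sketch below assumes synthetic sine data and compares a degree-1 and a degree-10 polynomial; the setup and the helper name `prediction_spread` are illustrative assumptions.

```python
import numpy as np
from numpy.polynomial import polynomial as P

rng = np.random.default_rng(2)  # fixed seed so the run is repeatable
x = rng.uniform(-1.0, 1.0, size=15)
y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=15)
x_query = np.linspace(-0.9, 0.9, 50)   # points at which predictions are compared

def prediction_spread(degree):
    """Average std of predictions across fits that each drop one training point."""
    preds = []
    for i in range(len(x)):
        keep = np.arange(len(x)) != i          # mask that removes example i
        coeffs = P.polyfit(x[keep], y[keep], degree)
        preds.append(P.polyval(x_query, coeffs))
    # std across the 15 refits at each query point, then averaged over the grid
    return float(np.mean(np.std(np.array(preds), axis=0)))

spread_simple = prediction_spread(1)
spread_complex = prediction_spread(10)
print(f"degree  1 spread: {spread_simple:.4f}")
print(f"degree 10 spread: {spread_complex:.4f}")
```

A larger spread means that removing one data point changes the model's answers more, which is the sensitivity described above.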

Advantages of Overfitting:

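While overfitting itself is undesirable, it can sometimes provide insights into the model and the data. For example, deliberately overfitting a very small dataset is a common sanity check in deep learning: if the model cannot drive the training error close to zero on a handful of examples, it probably lacks the capacity to learn the task, or there is a bug in the training code.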

Why Overfitting Occurs:

Overfitting occurs when a model becomes too complex relative to the amount and nature of the training data. It happens because training only minimizes error on the training set. As model capacity grows, bias falls but variance rises. Past a certain point, the model keeps lowering its training error by fitting noise, and the increase in variance outweighs the reduction in bias.
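The bias and variance terms above can be made precise for squared-error loss. As a sketch, assume the data follow $y = f(x) + \varepsilon$ with $\mathbb{E}[\varepsilon] = 0$ and $\operatorname{Var}(\varepsilon) = \sigma^2$, and let $\hat{f}$ be the model fit on a randomly drawn training set (this notation is introduced here for illustration). The expected error at a point $x$ then decomposes as:

$$
\mathbb{E}\left[(y - \hat{f}(x))^2\right]
= \underbrace{\left(\mathbb{E}[\hat{f}(x)] - f(x)\right)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\left[\left(\hat{f}(x) - \mathbb{E}[\hat{f}(x)]\right)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

An overfit model sits at the low-bias, high-variance end of this trade-off.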

Ways to Improve Models Against Overfitting:

- Simplifying the Model: Use simpler models with fewer parameters to reduce the risk of overfitting, especially when working with small datasets.

- Regularization: Regularization techniques, such as L1 or L2 regularization, add a penalty on large weights to the training objective, which discourages the model from becoming overly complex.

- Cross-Validation: Use cross-validation to assess the generalization performance of the model and identify potential overfitting.

- Feature Selection: Choose relevant features and eliminate irrelevant or noisy features to reduce the complexity of the model and mitigate overfitting.

- Data Augmentation: Increase the effective size of the training dataset by generating additional training examples from existing data, for example by flipping, cropping, or adding noise to images.

- Early Stopping: Monitor the model's performance on a separate validation dataset during training and stop the training process when the validation performance starts to degrade, preventing the model from overfitting.

- Ensemble Methods: Combine predictions from multiple models trained on different subsets of the data to reduce overfitting and improve generalization.
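Two of the techniques above, L2 regularization and cross-validation, work naturally together: the regularization strength is a hyperparameter, and cross-validation is one way to choose it. The following is a minimal sketch using only NumPy. It assumes synthetic sine data, degree-10 polynomial features, and a hand-picked grid of penalty values; the helper names `features`, `ridge_fit`, and `cv_mse` are hypothetical and not taken from any library.

```python
import numpy as np

rng = np.random.default_rng(3)  # fixed seed so the run is repeatable
x = rng.uniform(-1.0, 1.0, size=30)
y = np.sin(np.pi * x) + rng.normal(scale=0.3, size=30)

def features(x, degree=10):
    """Polynomial features [1, x, x^2, ..., x^degree]."""
    return np.vander(x, degree + 1, increasing=True)

def ridge_fit(X, y, lam):
    """Closed-form L2-regularized least squares.

    Solves (X^T X + lam * I) w = X^T y, leaving the intercept unpenalized.
    """
    penalty = lam * np.eye(X.shape[1])
    penalty[0, 0] = 0.0
    return np.linalg.solve(X.T @ X + penalty, X.T @ y)

def cv_mse(x, y, lam, k=5):
    """Mean validation MSE over k folds (x is already in random order)."""
    idx = np.arange(len(x))
    errors = []
    for fold in np.array_split(idx, k):
        train = np.setdiff1d(idx, fold)        # every index not in this fold
        w = ridge_fit(features(x[train]), y[train], lam)
        errors.append(np.mean((features(x[fold]) @ w - y[fold]) ** 2))
    return float(np.mean(errors))

lambdas = [1e-6, 1e-4, 1e-2, 1e-1, 1.0, 10.0]   # illustrative grid
cv_scores = {lam: cv_mse(x, y, lam) for lam in lambdas}
best_lam = min(cv_scores, key=cv_scores.get)

for lam, score in cv_scores.items():
    print(f"lambda = {lam:g}: cross-validated MSE = {score:.4f}")
print(f"selected lambda: {best_lam:g}")
```

Each candidate penalty is scored only on data the model did not see during fitting, so the selected value reflects generalization rather than training fit. In practice, a library implementation with shuffled or stratified folds would usually replace these hand-written helpers.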

Conclusion

Overfitting is a common challenge in machine learning and deep learning, where models become too complex relative to the training data. It leads to poor generalization performance and unstable predictions. By employing techniques such as model simplification, regularization, cross-validation, and data augmentation, you can mitigate overfitting and improve the performance and robustness of your models.
